The mathematical prodigy who gave the world ‘bits’

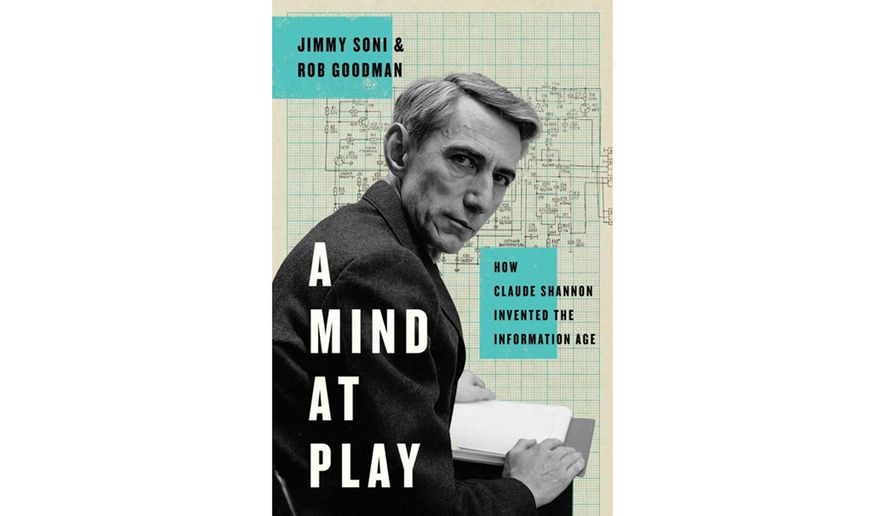

Many people, most notably Al Gore, have claimed to be the father of the information age, but Claude Shannon probably deserves the most credit. In 1948, he wrote an article that is considered to be the “Magna Carta” of information technology. In their book “A Mind at Play,” Jimmy Soni and Rob Goodman explain how this nearly forgotten American genius revolutionized the way we think about communications.

Shannon was a mathematical prodigy who could actually do things. As a boy in rural Michigan, he turned barbed wire fences into telegraphs. Later in life, he built the first chess-playing computer as well as robots and a juggling clown. All of this was done just for fun, but he was a legitimate American scientific giant with multiple advanced degrees in math and engineering.

Claude Shannon was of draft age when conscription was introduced as America reluctantly stood on the brink of entry into World War II. He did not like being in large groups of people and realized that soldiering was probably not a good fit. Instead, he contributed to the war effort by working at the legendary Bell Labs skunk works, and his efforts at creating an unbreakable code contributed immeasurably to victory.

This book can be read on two levels. The first is a study of how an authentic first-rate mind worked through the noise of communications theory to create the theoretical backbone of the information age. For mathematical idiots such as myself, the sections on the math may be a little much.

However, the story of Shannon as a fascinating human being is readable and compelling. By 1937, he had figured out that binary switches were the foundation of digital computing. In 1948, he wrote the bombshell “A Mathematical Theory of Communication.” It introduced the concept of the “bit” and eventually changed the world. His academic awards and reputation were formidable, and he became a legend in the relatively closed worlds of mathematics and information theory, but he remained modest and an interesting character in his own right.

As with Ben Franklin, Shannon’s work was play, and the two activities were inseparable. One can imagine him coming up with a cryptographic solution while working on a chess problem or banging out a jazz tune. The authors argue that he was a true generalist, and they make a convincing case for it.

Mr. Soni and Mr. Goodman are solid researchers; they bring Shannon to life both as a scientist and an intriguing individual. The authors make his story readable as well as informative. This was a jazz enthusiast who could juggle and ride a unicycle at the same time, all while leading the world into the information age. He was an American original.

Despite a failed early marriage to a leftist activist in the 1930s, Shannon went on to find the love of his life and became a devoted husband and father. In later life, he was a professor of legendary proportions at the Massachusetts Institute of Technology until Alzheimer’s disease cut his productive life tragically short in 2001. I once reviewed a biography of Thomas Edison, and I found myself despising the man more with every turn of the page. That was not the case with Shannon; he was a genuinely decent human being.

Shannon believed that machines had the potential to someday surpass the human brain in cognitive ability through artificial intelligence (AI). He envisioned the possibility of a machine like Deep Blue defeating the world’s greatest chess player or the Watson AI beating the likes of Ken Jennings on “Jeopardy.”

He saw that as a good thing, and he truly believed that the technologies he was pioneering were a force for good in the world. The term “singularity” now means the point at which artificial intelligence will meet and then surpass human capability. This prospect has deeply concerned genius-level thinkers, including Stephen Hawking, who believe it may become a real threat. Some think it will happen by the end of the decade; others say by mid-century.

Had Shannon lived to see us this close to the singularity, what would he have thought? He would probably have tried to invent governors that would prevent AI from killing people. He was an optimist, after all, but how would he have viewed the possibility of machines demanding equal pay for equal work and voting rights? Shannon’s playful mind would have enjoyed the challenge.
